The OnLogic Blog: Edge Computing Articles & Insights

DEPEND ONLOGIC

Installing Fanless Computers: Important Dos and Don’ts

Fanless computers offer many advantages, including versatile mounting options, efficient power use, and enhanced reliability.

APRIL 14 • 5 MIN READ

INDUSTRIAL IOT

Industrial Raspberry Pi: A Brief History and the Near Future

Intended to provide makers of all ages with a low-cost platform to learn the basics of coding, the first production Raspberry Pi was sold in 2012 for $30.

MARCH 31 • 4 MIN READ

OnLogic Headquarters – Creating a Sustainable Smart Building

Constructing a new building from the ground up gave us the rare opportunity to leverage some of our own computers and our talented engineering team to create not just a beautiful building, but one that [read more]

Turning a Staircase into an Art Installation

At the heart of OnLogic’s headquarters is an incredible staircase - what our team of OnLogicians call the “Monumental Stairs”. The stairs are not only attractive architecturally, they are actually an art installation that offers [read more]

Edge AI Architecture: The Edge is the Place to be for AI

AI seems to be part of every technology-related conversation lately. While for some time AI appeared more hype than reality, we now seem to be at an important inflection point. As one example of AI's [read more]

Predictive Maintenance – how AI and the IoT are Changing Machine Maintenance

The Internet of Things (IoT) and artificial intelligence (AI) are changing how we maintain equipment to save time, save money, and keep systems running. Continue reading to learn the different types of maintenance, including reactive [read more]

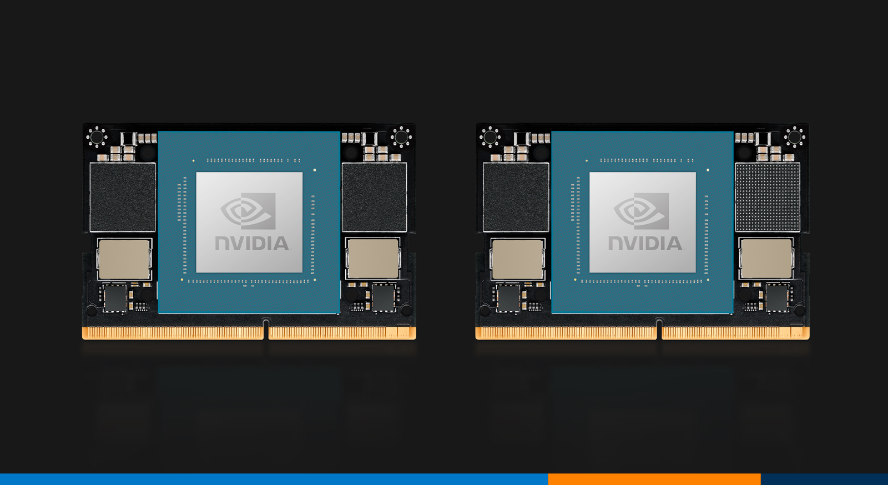

Deploying NVIDIA Jetson for AI-powered Automation

NVIDIA® Jetson™ has emerged as an early leader in the ongoing race for hardware platform supremacy to support the massive growth of artificial intelligence (AI) deployments. NVIDIA is already a household name in the world [read more]

Partnering with UVM Students on Sustainable Innovation

Developing and acting on initiatives for sustainable technology solutions is a team effort. At OnLogic, the core concepts around sustainability are woven into everything we do, but we're always on the lookout for ways we [read more]

OnLogic Industrial PCs: Designed to last. Built to order. Delivered in days. Visit our online store at OnLogic.com